Hi! We have encountered an issue while using Depthkit Studio. How can we improve the texture quality of the face in our captures?

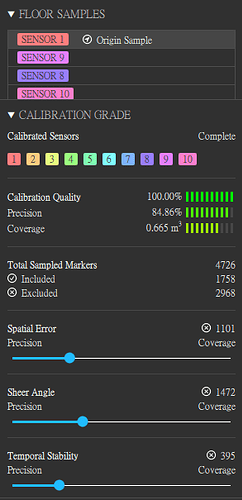

Below I have attached a link to the exported video, the calibration grade results, and pictures of our camera setup.

@Chun-ChiaHsu Thanks for sharing your calibration metrics, photos of your studio, and an example asset.

I spotted a few things in what you shared that can improve quality:

First, your hero sensor can come closer to the subject and be aimed slightly downward so that the subject takes up more of that sensor’s frame. This will result in higher-resolution geometry and textures for the front of the capture. As it is currently positioned, much of the frame is wasted on unused headroom above the subject (highlighted in red above).
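
To put rough numbers on why moving closer helps, here is a minimal pinhole-camera sketch of how many image rows a subject occupies at a given distance. The function name, the 0.25 m head height, the 60° vertical field of view, and the 1080-row image are all hypothetical values chosen for illustration, not the specs of any particular sensor; it also ignores aiming, which is what recovers the wasted headroom.

```python
import math

def pixels_on_subject(subject_height_m: float,
                      distance_m: float,
                      vertical_fov_deg: float,
                      image_height_px: int) -> float:
    """Approximate how many image rows a subject of a given height occupies.

    Pinhole approximation: at distance d the frame spans 2 * d * tan(FOV / 2)
    metres vertically, so the subject's share of that span times the image
    height gives its height in pixels.
    """
    frame_height_m = 2.0 * distance_m * math.tan(math.radians(vertical_fov_deg) / 2.0)
    fraction = min(subject_height_m / frame_height_m, 1.0)  # cannot exceed the full frame
    return fraction * image_height_px

# Hypothetical values: a 0.25 m tall head, 60-degree vertical FOV, 1080-row image.
for d in (3.0, 2.0, 1.5):
    print(f"{d:.1f} m -> {pixels_on_subject(0.25, d, 60.0, 1080):.0f} px")
```

Under this approximation, halving the distance doubles the number of pixels on the face, which is the extra texture and depth detail the hero sensor gains by moving in.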

I also noticed that some sensors were set to different exposure and white balance settings at the time of your capture, since the color data does not match between some of the perspectives in the export. For future captures, be sure to configure all of the sensors’ Exposure and White Balance settings before capturing so the RGB data is uniform across all sensors.
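
If you want a quick way to spot a mismatched sensor, one option (an assumption on our part, not a built-in Depthkit feature) is to record a neutral gray card visible to every sensor, measure the average RGB of that patch in each sensor's color image, and compare each sensor against the median of all of them. The helper name, the 5% tolerance, and the sample values below are hypothetical.

```python
from statistics import median

def flag_mismatched_sensors(patch_means: dict[str, tuple[float, float, float]],
                            tolerance: float = 0.05) -> list[str]:
    """Return sensors whose average RGB of a shared neutral patch deviates from
    the per-channel median across all sensors by more than `tolerance`
    (expressed as a fraction of that median)."""
    medians = [median(rgb[c] for rgb in patch_means.values()) for c in range(3)]
    flagged = []
    for name, rgb in patch_means.items():
        if any(abs(rgb[c] - medians[c]) > tolerance * medians[c] for c in range(3)):
            flagged.append(name)
    return flagged

# Hypothetical 0-255 averages of the same gray patch as seen by four sensors.
samples = {
    "sensor_1": (128.0, 128.0, 128.0),
    "sensor_2": (130.0, 127.0, 129.0),
    "sensor_3": (150.0, 128.0, 110.0),  # looks like a warmer white balance
    "sensor_4": (127.0, 129.0, 128.0),
}
print(flag_mismatched_sensors(samples))
```

Comparing against the median rather than the mean keeps one badly configured sensor from dragging the reference toward itself. The fix itself is still to set identical Exposure and White Balance values on every sensor before capture.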

The calibration looks very good, but I noticed that the Floor Sample doesn’t look perfectly parallel to the floor, resulting in some floor geometry that cannot be removed with the bounding box.

If you have multiple calibration charts (each displaying unique markers, so there are no redundancies), you can gather a more accurate floor sample by laying several charts on the floor, all oriented in the same direction (see the arrow at the top of our calibration chart PDF), and then capturing or replacing your floor sample. Depthkit will average the positions of all of the markers in the scene and use the result as the origin of the asset. This makes it easier to remove the entire floor with the bounding box during editing.
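
As a rough illustration of why several charts help, the sketch below averages marker centers to get an origin and averages per-marker "up" normals to estimate the floor orientation. This is a generic averaging sketch, not Depthkit's actual floor-sample code: the y-up convention, the idea of per-marker normals, the function names, and the small tilt errors are all assumptions for illustration.

```python
import math

Vec3 = tuple[float, float, float]

def mean_vec(vectors: list[Vec3]) -> Vec3:
    """Component-wise average of a list of 3-D vectors."""
    n = len(vectors)
    return (sum(v[0] for v in vectors) / n,
            sum(v[1] for v in vectors) / n,
            sum(v[2] for v in vectors) / n)

def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)

def floor_origin_and_up(centers: list[Vec3], normals: list[Vec3]) -> tuple[Vec3, Vec3]:
    """Origin = mean marker position; up = normalized mean marker normal."""
    return mean_vec(centers), normalize(mean_vec(normals))

def tilt_deg(up: Vec3, true_up: Vec3 = (0.0, 1.0, 0.0)) -> float:
    """Angle in degrees between an estimated up vector and the true floor normal."""
    dot = sum(a * b for a, b in zip(up, true_up))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))

def tilted_normal(deg: float) -> Vec3:
    """Hypothetical noisy marker normal: +y tilted by `deg` degrees about the z-axis."""
    r = math.radians(deg)
    return (math.sin(r), math.cos(r), 0.0)

centers = [(0.0, 0.0, 0.0), (0.6, 0.0, 0.0), (0.0, 0.0, 0.6)]
normals = [tilted_normal(3.0), tilted_normal(-2.0), tilted_normal(-1.0)]
origin, up = floor_origin_and_up(centers, normals)
print("single chart tilt (deg):", round(tilt_deg(normals[0]), 2))
print("averaged tilt (deg):", round(tilt_deg(up), 2))
```

When the per-chart errors point in different directions, they largely cancel in the average, so the combined floor estimate is much flatter than any single chart's. That is the intuition behind laying out many charts.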

There are other ways to improve the quality of your captures, but to make recommendations, we will need to see the Surface Reconstruction Settings and Texture Blending Settings you are using to export your assets. Can you share screenshots of both of those panels for the asset you exported? We’ll take a look and go from there.

@CoryAllen Thank you very much for your precise and detailed response! It helped us a lot!

Here are screenshots of the panels you mentioned:

@Chun-ChiaHsu Thanks for the screenshots. It looks like these settings are still set to their defaults, and adjusting them as follows will result in a higher quality asset.

Mesh Density: When exporting in the Combined per Pixel format, increase this to 200-300 voxels/m to preserve geometric detail; this won’t affect the size of the exported video. (If you are instead exporting a textured mesh sequence (OBJs), this setting will affect output size, so adjust it to find a balance between detail and size.)
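
For a sense of scale, here is some generic voxel-grid arithmetic. It assumes Mesh Density is the number of voxels per metre along each axis, as the voxels/m unit suggests. The quadratic growth estimate for OBJ exports is a rough rule of thumb (surfaces are 2-D, so sample counts scale with the square of linear density), not a measurement of Depthkit's output. The function names are hypothetical.

```python
def voxel_size_mm(density_voxels_per_m: float) -> float:
    """Edge length of one voxel in millimetres for a given Mesh Density."""
    return 1000.0 / density_voxels_per_m

def relative_surface_samples(new_density: float, old_density: float) -> float:
    """Rough growth factor in surface samples (and thus mesh vertices/faces)
    when density changes: counts scale with the square of linear density."""
    return (new_density / old_density) ** 2

for density in (200, 300):
    print(f"{density} voxels/m -> {voxel_size_mm(density):.2f} mm voxels")
print(f"200 -> 300 voxels/m: about {relative_surface_samples(300, 200):.2f}x the mesh data")
```

So the recommended 200-300 voxels/m range corresponds to roughly 3.3-5 mm voxels. For OBJ sequences, going from 200 to 300 voxels/m may roughly double the per-frame mesh data.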

Depth Bias: This setting is usually best left at or near its default of 8mm, but feel free to try other values to see whether they better align the texturing from different sensors.

Surface Infill: When your asset is captured with 10 sensors, as this one is, we recommend lowering this setting, often to its minimum value, to reduce or eliminate the infill artifacts that often appear in the gaps between larger sections of geometry (see the area circled in red above).

Surface Smoothing: If, after raising the Mesh Density, there are loose floating particles near areas like the subject’s hands, you can raise this value to ~4mm-5mm to reduce them. Increasing it too much will result in a loss of detail, so find a setting that achieves a balance.

Fixed Texture Blend: I was able to get a better result with a slightly higher value of 2.6.

Dynamic Texture Blend: I was able to get a better result with a significantly higher value of 11.7.

Texture Spill Correction Intensity: I was able to get a better result with a slightly higher value of 10.7.

Texture Spill Correction Feather: I was able to get a better result with a slightly higher value of 1.7%.

These should be good starting points, but feel free to adjust based on what looks best to you.

Thanks for the feedback!

On Thu, Jan 4, 2024 at 12:28 AM, Cory Allen via Depthkit Community <notifications@depthkit.discoursemail.com> wrote: